i> My undergraduate thesis is titled "Research and Implementation of Image Dehazing Methods Based on Deep Learning".

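Thesis summary series:

- Thesis Summary 0: Introduction

- Thesis Summary 1: Traditional Dehazing Theory

- Thesis Summary 2: Paper Reproduction 1: Dark Channel Prior Dehazing (DCP)

- Thesis Summary 3: Paper Reproduction 2: MSCNN Dehazing

- Thesis Summary 4: Choosing and Implementing the Final Dehazing Scheme: DeBlurGanToDehaze

- Thesis Summary 5: Miscellaneous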

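1. Preface

The previous post covered the principle and implementation of the traditional dehazing method DCP in detail. This post turns to deep learning, which has been so popular in recent years, and to how it can be applied to dehazing.

All code in this post is open source; the links are at the end.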

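2. A Digression: A Brief Look at Deep Learning

Deep learning took off after AlexNet, a deep-learning model, won the ILSVRC 2012 competition by a wide margin. It has since become almost synonymous with AI and is used in natural language processing, image classification, speech recognition, and many other fields. To learn deep learning, you first need to know what it is and how "artificial intelligence", "machine learning", and "deep learning" differ.

Borrowing from a blog post, deep learning can be defined in the following ways: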

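1. Wiki: Deep learning is a branch of machine learning. It is a set of algorithms that attempt to model high-level abstractions in data using multiple processing layers that have complex structures or are composed of multiple non-linear transformations.
2. Robin Li (李彦宏): Simply put, deep learning is a set of functions, nothing more.
3. Deep learning combines feature extraction and classification in a single framework and learns features from data; it is a method that learns features automatically.
4. Deep learning is a feature-learning method that transforms raw data, through complex non-linear models, into higher-level, more abstract representations.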

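The figure below shows the relationship among the three intuitively.

Next, the star of deep learning in recent years: the convolutional neural network (CNN). What is distinctive about it? A classic article describes it like this: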

Convolutional Neural Networks (ConvNets or CNNs) are a category of Neural Networks that have proven very effective in areas such as image recognition and classification. ConvNets have been successful in identifying faces, objects and traffic signs apart from powering vision in robots and self driving cars.

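In other words, it is particularly good at image recognition and classification.

Here are a few articles for learning about CNNs and their evolution:

- An Intuitive Explanation of Convolutional Neural Networks

- CNN visualization

- CNN concepts: upsampling, deconvolution, and unpooling explained - g11d111's blog - CSDN

- CNN video tutorial

- The evolution of CNN architectures: from LeNet to DenseNet - Madcola - cnblogs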

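3. MSCNN Dehazing

The previous section was a bit of a digression, but since this post is about deep-learning-based dehazing, we should at least know what deep learning is and what a CNN, its most commonly used model, looks like.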

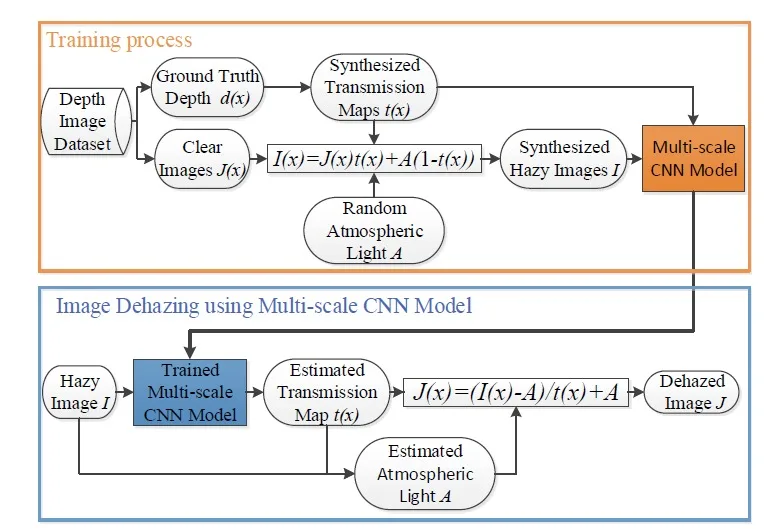

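MSCNN is short for "Single Image Dehazing via Multi-scale Convolutional Neural Networks", proposed by Ren et al. in 2016. The idea is to learn the mapping from a hazy image to its transmission map, then estimate the atmospheric light A with some algorithm, and finally apply the atmospheric scattering model to recover the haze-free image. (If you are unfamiliar with dehazing via the atmospheric scattering model, see Summary 1 of this series.)

In my view, the paper has three key points:

- It proposes a multi-scale network made of two sub-networks, one for coarse estimation and one for fine estimation.

- It uses the NYU dataset to synthesize the hazy-image/transmission-map training pairs that the network needs.

- It dehazes with the atmospheric scattering model, using the estimated transmission map and atmospheric light.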

3.1 Network Structure of MSCNN

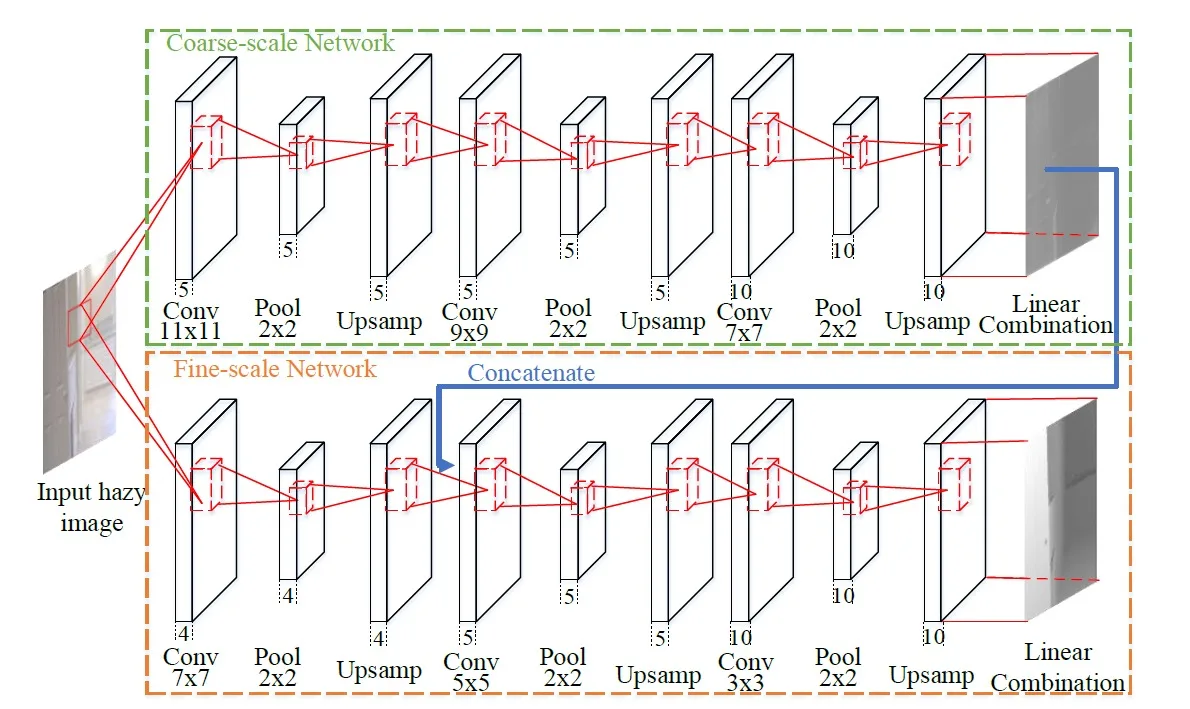

先上一章总图:

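The upper half is the Coarse Net and the lower half is the Fine Net. The Coarse Net estimates a coarse transmission map, which is then concatenated after the first Upsample layer of the Fine Net for further refinement. A brief note on how to read the parameters:

Each cuboid represents a feature map; the number below it is the number of convolution kernels, and the text below it gives the operation and its size.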

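For the training loss, MSCNN uses the mean squared error (MSE):

$$ L(t_i(x), t_i^{\delta}(x)) = \frac{1}{q}\sum_{i=1}^{q}\left\| t_i(x) - t_i^{\delta}(x) \right\|^2 $$

Here $t_i(x)$ is the predicted transmission map, $t_i^{\delta}(x)$ is the corresponding ground truth, and $q$ is the number of hazy images in one batch.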
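To make the formula concrete, here is a minimal NumPy sketch of this loss. The function name `transmission_mse` and the `(q, H, W)` array layout are assumptions made for illustration; they are not taken from the MSCNN code.

```python
import numpy as np


def transmission_mse(t_pred, t_true):
    """Batch mean of the squared L2 distance between transmission maps.

    Both inputs are assumed to have shape (q, H, W), with q images per batch.
    """
    t_pred = np.asarray(t_pred, dtype=np.float64)
    t_true = np.asarray(t_true, dtype=np.float64)
    if t_pred.shape != t_true.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    q = t_pred.shape[0]
    # Squared L2 norm per image: sum of squared pixel differences.
    per_image = ((t_pred - t_true) ** 2).reshape(q, -1).sum(axis=1)
    # Average over the q images in the batch.
    return per_image.mean()
```

A framework's built-in MSE loss usually also averages over pixels, so for fixed-size inputs it should differ from this formula only by a constant factor, which does not change the minimizer.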

Other settings:

- SGD optimizer with momentum 0.9

- Batch size of 100

- Input images are resized to a uniform 320×240

- Learning rate of 0.01, decayed by a factor of 0.1 every 20 epochs

- 70 epochs in total
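The learning-rate rule in this list can be written as a small step-decay function. This is a sketch under the values listed above; the name `step_decay` and its keyword arguments are made up for illustration.

```python
def step_decay(epoch, *, initial_lr=0.01, drop=0.1, epochs_per_drop=20):
    """Learning rate for a 0-based epoch index under step decay.

    The rate is multiplied by `drop` once every `epochs_per_drop` epochs.
    """
    if epoch < 0:
        raise ValueError("epoch must be non-negative")
    return initial_lr * drop ** (epoch // epochs_per_drop)
```

With 70 epochs this gives four plateaus (epochs 0-19, 20-39, 40-59, 60-69). A function like this could be handed to a learning-rate scheduler callback such as Keras's `LearningRateScheduler`, possibly through a small wrapper depending on the Keras version.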

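3.2 Synthesizing the Training Data

The authors build the training set from the NYU dataset, which provides clear images together with their depth maps. The synthesis formulas are:

$$ \left\{ \begin{array}{l} t(x) = e^{-\beta d(x)}\\ I(x) = t(x) J(x) + A(1-t(x)) \end{array} \right. $$

With $d(x)$ and $J(x)$ known, randomly sampling $\beta$ and $A$ gives $t(x)$ and, from it, the hazy image $I(x)$. For details of the synthesis procedure, see: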

- Benchmarking Single Image Dehazing and Beyond

- RESIDE
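The two synthesis formulas translate almost directly into NumPy. The sketch below assumes images scaled to [0, 1] and a scalar atmospheric light; the function names and the sampling ranges for $\beta$ and $A$ are placeholders chosen for illustration, not the values used in the paper.

```python
import numpy as np


def synthesize_hazy(clear, depth, beta, airlight):
    """Apply the atmospheric scattering model to one clear image.

    clear:    float array (H, W, 3) in [0, 1], the haze-free image J(x)
    depth:    float array (H, W), the depth map d(x)
    beta:     scattering coefficient (scalar)
    airlight: atmospheric light A (scalar, assumed equal across channels)
    """
    clear = np.asarray(clear, dtype=np.float64)
    depth = np.asarray(depth, dtype=np.float64)
    t = np.exp(-beta * depth)                  # t(x) = exp(-beta * d(x))
    t3 = t[..., np.newaxis]                    # broadcast over colour channels
    hazy = t3 * clear + airlight * (1.0 - t3)  # I(x) = t J + A (1 - t)
    return hazy, t


def random_pair(clear, depth, rng, beta_range=(0.5, 1.5), airlight_range=(0.7, 1.0)):
    """Draw beta and A at random (placeholder ranges) and synthesize one pair."""
    beta = rng.uniform(*beta_range)
    airlight = rng.uniform(*airlight_range)
    return synthesize_hazy(clear, depth, beta, airlight)
```

Calling `random_pair` several times on the same clear image and depth map yields several hazy-image/transmission-map pairs with different haze densities.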

3.3 The Complete Dehazing Pipeline
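Following the three points listed at the start of section 3, the flow is: run the hazy image through the network to get $t(x)$, estimate the atmospheric light $A$, then invert the scattering model, $J(x) = \frac{I(x) - A}{\max(t(x), t_0)} + A$. Below is a minimal sketch of the last step only. It takes $t(x)$ and $A$ as given, and the lower bound `t_min` (the $t_0$ above) is an assumption borrowed from common practice to avoid dividing by values near zero.

```python
import numpy as np


def recover_scene(hazy, transmission, airlight, t_min=0.1):
    """Invert I = t*J + A*(1 - t) for J, given an estimated t and A.

    hazy:         float array (H, W, 3) in [0, 1]
    transmission: float array (H, W)
    airlight:     scalar atmospheric light A
    t_min:        assumed lower bound on t to keep the division stable
    """
    hazy = np.asarray(hazy, dtype=np.float64)
    t = np.clip(np.asarray(transmission, dtype=np.float64), t_min, 1.0)
    t3 = t[..., np.newaxis]                  # broadcast over colour channels
    restored = (hazy - airlight) / t3 + airlight
    return np.clip(restored, 0.0, 1.0)       # keep the result a valid image
```

In the full pipeline, `transmission` would be the output of the trained network and `airlight` would come from whichever estimator is chosen.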

4. Reference Code for Building the MSCNN Network

The code is written with Keras. MSCNN also has MATLAB and TensorFlow versions; all links are at the end of the post.

4.1 Setting Hyperparameters and Auxiliary Parameters

self.model_dir_path = './model_save'

4.2 Building the coarseNet

def _build_coarseNet(self, input_img):

LinearCombine is a custom layer:

class LinearCombine(Layer):

4.3 Building the FineNet

def _build_fineNet(input_img, coarseNet):

4.4 Setting the Training Parameters

# Set the optimizer, loss function, etc.

4.5 Full Code

1 | """ |

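4.6 Other Notes

There are many other details to watch for in the code: resuming training from a saved checkpoint, normalizing the training pairs to a uniform format, using a generator for training rather than loading all images into memory at once when memory is limited, how many epochs or batches should pass between sampling intermediate results, and so on. All of this can be found in the full code linked at the end; it cannot all be covered here.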
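As an illustration of the generator approach mentioned above, here is a minimal sketch that loads only one batch of pairs at a time. `pair_generator` and the `load_pair` callable are hypothetical names for this example and are not taken from the linked repositories; `load_pair` is assumed to return arrays already resized to a common shape.

```python
import numpy as np


def pair_generator(sample_ids, load_pair, batch_size, rng=None):
    """Yield (hazy_batch, transmission_batch) forever, one batch in memory at a time.

    load_pair is a hypothetical callable: sample id -> (hazy, transmission),
    both NumPy arrays with the same shape for every sample.
    """
    if rng is None:
        rng = np.random.default_rng()
    ids = list(sample_ids)
    while True:
        rng.shuffle(ids)  # new sample order on every pass over the data
        for start in range(0, len(ids), batch_size):
            chunk = ids[start:start + batch_size]
            pairs = [load_pair(i) for i in chunk]
            hazy = np.stack([p[0] for p in pairs])
            trans = np.stack([p[1] for p in pairs])
            yield hazy, trans
```

Because the generator never terminates, the training loop has to be told how many batches make up one epoch (in Keras, through the `steps_per_epoch` argument).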

References

- Single Image Dehazing via Multi-scale Convolutional Neural Networks

All source code:

- https://github.com/raven-dehaze-work/MSCNN_Keras

- https://github.com/raven-dehaze-work/MSCNN_MATLAB

- https://github.com/dishank-b/MSCNN-Dehazing-Tensorflow